The Hidden AI Leadership Layer Running Your Business

How to manage AI adoption that's already happening across teams with little oversight

Thanks for reading AlphaEngage issue #113.

Inside: The emergence of departmental AI experimentation, why uncontrolled testing creates severe exposure, a framework for safe vs. risky AI usage, and how to build coordinated innovation without crushing momentum.

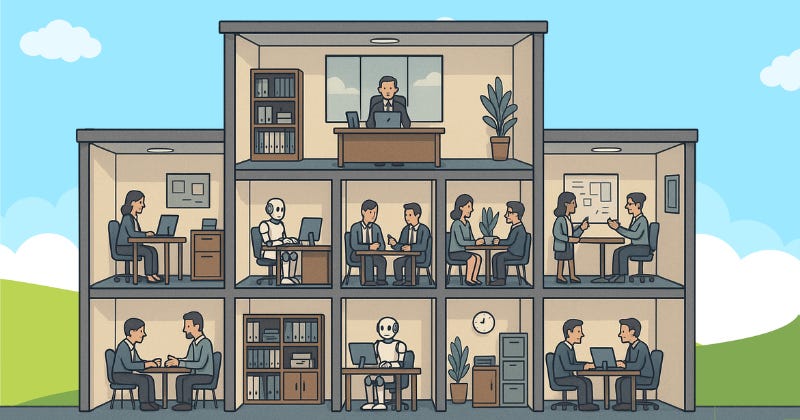

While you've been evaluating governance structures and executive roles, your managers have been quietly deploying AI tools that now handle everything from content creation to customer interactions to pipeline analysis.

Unfortunately, these weren't coordinated decisions. They were practical solutions to immediate problems, and together they have created an organizational AI footprint that processes sensitive data, influences customer relationships, and generates public-facing content without centralized oversight.

What began as departmental productivity experiments has evolved into operational dependencies with no assigned ownership.

The AI Risk Classification Framework

Rather than blocking experimentation or ignoring systemic risks, you need a framework that enables safe innovation while flagging genuine exposure. This system provides immediate clarity on what requires oversight.

🟢 Green Zone: Autonomous Experimentation

Brainstorming and ideation activities

Drafting internal documents for review

Analyzing publicly available information

Creating internal templates and frameworks

Learning and skill development with AI tools

🟡 Yellow Zone: Manager Approval Required

Generating content for external publication

Processing internal operational data for analysis

Using AI for customer communication or support

Creating training materials that reference company practices

Automating workflows that affect customer interactions

🔴 Red Zone: Executive/Legal Review Required

Processing customer data, employee records, or financial information

Generating content about competitive positioning or strategic planning

Using AI for contract language or legal document creation

Sharing information covered by regulatory requirements

Creating content that makes commitments on behalf of the company

The objective is clarity: teams can immediately identify what's safe to pursue independently versus what requires coordination or careful review.
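To make the three zones easier to apply consistently, the framework can be expressed as a simple decision rule. The sketch below is a minimal illustration, not a prescribed tool. It assumes each proposed AI use can be described by a handful of yes/no attributes; the `AIUseCase` fields and the `classify` function are hypothetical names that mirror the zone lists above.

```python
from dataclasses import dataclass
from enum import Enum


class Zone(Enum):
    GREEN = "Autonomous experimentation"
    YELLOW = "Manager approval required"
    RED = "Executive/legal review required"


@dataclass
class AIUseCase:
    """Hypothetical description of one proposed AI use; field names are illustrative."""
    description: str
    # Yellow-zone triggers
    external_publication: bool = False         # content will be published outside the company
    internal_operational_data: bool = False    # internal operational data processed for analysis
    customer_facing: bool = False              # customer communication, support, or workflows
    references_company_practices: bool = False # e.g. training materials on internal practices
    # Red-zone triggers
    sensitive_data: bool = False               # customer, employee, or financial records
    strategic_content: bool = False            # competitive positioning or strategic planning
    legal_or_contractual: bool = False         # contract language or legal documents
    regulated_information: bool = False        # information under regulatory requirements
    makes_commitments: bool = False            # content that commits the company


def classify(use: AIUseCase) -> Zone:
    """Return the most restrictive zone triggered: red is checked first, then yellow."""
    red_flags = (
        use.sensitive_data,
        use.strategic_content,
        use.legal_or_contractual,
        use.regulated_information,
        use.makes_commitments,
    )
    if any(red_flags):
        return Zone.RED
    yellow_flags = (
        use.external_publication,
        use.internal_operational_data,
        use.customer_facing,
        use.references_company_practices,
    )
    if any(yellow_flags):
        return Zone.YELLOW
    return Zone.GREEN  # nothing flagged: brainstorming, internal drafts, learning, etc.


if __name__ == "__main__":
    examples = [
        AIUseCase("Brainstorm campaign themes"),
        AIUseCase("Draft a public blog post", external_publication=True),
        AIUseCase("Summarize churned-customer records", sensitive_data=True, customer_facing=True),
    ]
    for ex in examples:
        print(f"{ex.description}: {classify(ex).name}")
```

Checking red triggers before yellow ones means a use that touches both customer data and customer communication lands in the red zone. This sketch assumes the strictest applicable zone should always win.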

The Visibility Gap

Many executives cannot answer fundamental questions about their organization's current AI usage: which processes depend on AI for daily operations, what sensitive data flows through external systems, which customer communications are AI-generated, and what would happen if these tools became unavailable tomorrow.

This isn't an oversight failure, though. Teams solved legitimate problems faster than governance frameworks typically develop. Operations now optimizes scheduling with AI, finance uses it for fraud detection, HR experiments with candidate screening, and legal relies on it for contract review. Taken together, these uses create dependencies and exposure that no single person is currently responsible for coordinating.

Practical Governance Implementation

Deploy Clear Guidelines Immediately

Provide teams with actionable guidance they can reference quickly. Instead of directing them to "use AI responsibly," specify concrete boundaries: "Before using AI to generate external content, verify it contains no strategic information, competitive intelligence, or unverified claims that could create legal liability."

Establish Fast Review Processes

For activities requiring approval, provide decisions within 48 hours. Designate coordination contacts within each department and create streamlined forms focused on key risk factors rather than comprehensive documentation. The goal is sufficient oversight without friction that encourages circumvention.
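A 48-hour commitment only holds if someone can see which requests are about to miss it. The sketch below shows one way a coordination contact might track that. It is an illustration under stated assumptions: the `ReviewRequest` form, its fields, and `overdue_requests` are hypothetical, and the form deliberately captures only a few key risk factors rather than full documentation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

REVIEW_SLA = timedelta(hours=48)  # the 48-hour decision target from the text


@dataclass
class ReviewRequest:
    """A hypothetical short-form request capturing only key risk factors."""
    team: str
    use_case: str
    data_involved: str           # e.g. "public", "internal", "customer"
    audience: str                # "internal" or "external"
    submitted_at: datetime
    decided_at: Optional[datetime] = None

    def deadline(self) -> datetime:
        """Latest time a decision should be returned."""
        return self.submitted_at + REVIEW_SLA

    def is_overdue(self, now: datetime) -> bool:
        """True if no decision has been made and the 48-hour window has passed."""
        return self.decided_at is None and now > self.deadline()

    def met_sla(self) -> bool:
        """True if a decision was returned within the window."""
        return self.decided_at is not None and self.decided_at - self.submitted_at <= REVIEW_SLA


def overdue_requests(requests: List[ReviewRequest], now: datetime) -> List[ReviewRequest]:
    """Requests a coordination contact should escalate now."""
    return [r for r in requests if r.is_overdue(now)]


if __name__ == "__main__":
    now = datetime(2024, 5, 3, 9, 0)
    queue = [
        ReviewRequest("Marketing", "AI-drafted newsletter copy", "internal", "external",
                      datetime(2024, 5, 1, 8, 0)),
        ReviewRequest("Support", "AI reply suggestions", "internal", "external",
                      datetime(2024, 5, 2, 14, 0)),
    ]
    for r in overdue_requests(queue, now):
        print(f"Escalate: {r.team} / {r.use_case} (was due {r.deadline():%Y-%m-%d %H:%M})")
```

With these illustrative timestamps, only the Marketing request, submitted more than 48 hours before `now`, is flagged for escalation.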

Create Learning Mechanisms

When AI produces unexpected results, focus on process improvement rather than individual accountability. Many problems stem from insufficient training on AI limitations. Establish procedures for sharing best practices, reporting issues with AI-generated content, and updating guidelines based on experience.

Coordination That Scales

Assessment and Standards

Conduct a rapid assessment of current AI usage to identify immediate risks, then create regular forums for teams to share results and lessons learned. Build pathways for successful experiments to scale organization-wide through standardized tools and training programs based on proven implementations.
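A rapid assessment can start as a simple inventory whose columns answer the visibility questions raised earlier: what depends on AI daily, what sensitive data leaves the company, which customer communications are AI-generated, and who owns each use. The sketch below assumes exactly that structure. The `AIToolUsage` fields, the triage rule in `immediate_risks`, and the sample rows are all hypothetical and invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class AIToolUsage:
    """One row in a hypothetical AI usage inventory; each flag answers a visibility question."""
    department: str
    process: str
    daily_dependency: bool         # Would this process stall if the tool vanished tomorrow?
    sends_sensitive_data: bool     # Does sensitive data flow to an external system?
    customer_facing_output: bool   # Does it generate customer communications?
    owner: Optional[str] = None    # Named person accountable for this usage, if any


def summarize(inventory: List[AIToolUsage]) -> Dict[str, List[AIToolUsage]]:
    """Group inventory rows under each visibility question they raise."""
    return {
        "daily dependency": [u for u in inventory if u.daily_dependency],
        "sensitive data sent externally": [u for u in inventory if u.sends_sensitive_data],
        "AI-generated customer communication": [u for u in inventory if u.customer_facing_output],
        "no assigned owner": [u for u in inventory if u.owner is None],
    }


def immediate_risks(inventory: List[AIToolUsage]) -> List[AIToolUsage]:
    """Hypothetical triage rule: unowned usage that touches sensitive data or customers."""
    return [
        u for u in inventory
        if u.owner is None and (u.sends_sensitive_data or u.customer_facing_output)
    ]


if __name__ == "__main__":
    # Illustrative rows only; departments echo the examples in the text.
    inventory = [
        AIToolUsage("Operations", "shift scheduling", True, False, False, owner="Ops lead"),
        AIToolUsage("Finance", "fraud detection", True, True, False),
        AIToolUsage("HR", "candidate screening", False, True, False),
        AIToolUsage("Legal", "contract review", True, True, False, owner="Legal ops"),
        AIToolUsage("Support", "customer email replies", True, False, True),
    ]
    for question, rows in summarize(inventory).items():
        print(f"{question}: {[u.department for u in rows]}")
    print("Address first:", [u.department for u in immediate_risks(inventory)])
```

Filtering on ownership first reflects the article's core concern: the riskiest uses are often the ones nobody has been assigned to coordinate.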

Strategic Integration

Build measurement systems that track both productivity gains and risk-management effectiveness. Create knowledge-transfer processes so that AI insights benefit other teams. Focus on building organizational capability that compounds, rather than isolated departmental solutions.

Executive Requirements

This distributed yet coordinated model creates new leadership requirements beyond traditional technology strategy. Executives need enough AI knowledge to weigh value against risk, budgeting approaches that fund experimentation and training, and cultural frameworks that foster both innovation and effective oversight.

In practice, this means understanding AI capabilities and limitations, allocating resources for guided experimentation, and implementing organizational learning systems that refine governance based on practical results.

The Path Forward

Successful organizations combine team-level experimentation speed with executive-level risk coordination. This enables continued practical AI adoption within clear boundaries while creating mechanisms that ensure knowledge transfer, prevent system conflicts, and systematically build capability.

Companies that implement guided innovation frameworks now are positioned to gain lasting advantages over those that either restrict experimentation or let it expand unchecked without preparing to scale.

Your organization will develop an AI strategy either way. The question is whether it will be strategically designed or accidentally accumulated. That choice determines whether AI becomes a competitive advantage or a source of organizational entropy.